Are Machines Racist?

This article explores whether automated machines, from soap dispensers to photo algorithms, can be racist.

It all started with a YouTube video in which a black man and his white friend were in a bathroom using an automatic soap dispenser. When the white friend put his hand out, soap squirted into it. But when the black friend put his hand out, nothing came out.

“Black hand, nothing,” he was heard saying, as his white friend was able to get soap every time.

The question is, are we building machines like these to be racist?

Vox tested a Twitter algorithm that had drawn wide attention for automatically choosing a white face over a black face in a vertical image. When a photo showed a white man and a black man, one face above the other, Twitter’s algorithm seemed to always pick the white face to display.

A company called Gradio, co-founded by Dawood Khan, created an algorithm similar to what its team thinks Twitter is doing. It relies on saliency, a term for which parts of a photo people notice most. By tracking people’s eye movements as they viewed photos, the team was able to generate saliency percentages for specific points in an image.

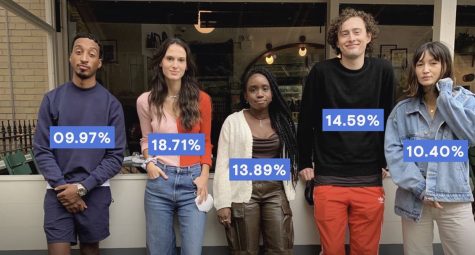

Check this photo out, for example.

The percentages highlighted in purple are the saliency rates for each person in the photo. If someone were to view this image, their eyes would most likely fall first on the woman in the coral/red shirt, since she has the highest saliency rate. Many people have noticed that people with lighter skin tend to get higher saliency rates.
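To make the idea concrete, here is a minimal sketch of how a crop could follow saliency. It is not Twitter's or Gradio's actual code. The function names, the made-up scores, and the rule of centering the crop on the single highest-scoring point are all assumptions for illustration.

```python
def most_salient_point(saliency):
    """Return (row, col) of the highest score in a 2D grid of saliency scores."""
    best = (0, 0)
    for r, row in enumerate(saliency):
        for c, score in enumerate(row):
            if score > saliency[best[0]][best[1]]:
                best = (r, c)
    return best


def crop_rows_around(saliency, crop_height):
    """Return (top, bottom) row bounds of a crop centered on the most
    salient point, shifted as needed to stay inside the image."""
    total_rows = len(saliency)
    r, _ = most_salient_point(saliency)
    top = r - crop_height // 2
    top = max(0, min(top, total_rows - crop_height))
    return top, top + crop_height


# Hypothetical saliency scores for a tall image with two faces,
# one above the other. The values are invented, not measured.
toy_map = [
    [0.05, 0.10],
    [0.10, 0.62],  # face A (upper)
    [0.05, 0.05],
    [0.05, 0.05],
    [0.58, 0.10],  # face B (lower)
    [0.05, 0.05],
]

print(most_salient_point(toy_map))   # location of the top score
print(crop_rows_around(toy_map, 3))  # rows kept in a 3-row preview
```

In this toy case the two faces score 0.62 and 0.58, yet a winner-takes-all crop keeps only the rows around the higher one. Under this assumed rule, even a small gap in saliency decides which face is displayed.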

So, in the end, machines can be racist. They follow specific formulas, and they learn from those formulas. If they learn the wrong way, however, they can pick up undesirable practices. Our job is to teach machines the right way.
